A Practical Guide to Understanding the AI Stack for GxP Teams

A practical guide to the six-layer AI Stack for GxP teams, covering validation focus areas, risks, and regulatory alignment.

David Iroaganachi

12/12/2025 · 4 min read

Generative AI is already in your organisation—drafting emails, summarising reports, and assisting reviews. More demos and "AI use cases" appear daily, usually focused on what the user sees: the interface, the workflow, the chat experience.

But the UI is only the surface.

A common misconception is that “AI” is a single model, when even the simplest use case depends on multiple layers working together. Each layer behaves differently, introduces different risks, and requires its own controls.

This article outlines the AI Stack for GxP teams, providing a practical look at the layers that matter for validation, governance, and compliant adoption.

What Is an AI Stack?

An AI Stack is the set of layered technologies, frameworks, and infrastructure components behind both traditional and modern AI systems, spanning everything from the underlying infrastructure and model up to the user interface. Each layer has different owners, different risks, and different validation requirements.

Why It Matters

Understanding the AI stack helps GxP teams pinpoint where validation should focus, avoiding common pitfalls such as validating the wrong component, missing compliance gaps, or misunderstanding vendor claims. It also provides a clear, defensible way to define validation boundaries that auditors can follow.

Regulators may not use the term “AI stack,” but they do expect documented controls at every architectural layer. The stack offers a practical way to show where those controls live.

It also turns vendor assessments from black-box demos into targeted questions: Which LLM version? How is data segregated? Who maintains each layer?

This shared structure helps bridge the gap between CSV, QA, Digital, IT, and Data teams—who often see different parts of the system but rarely share the same mental model.

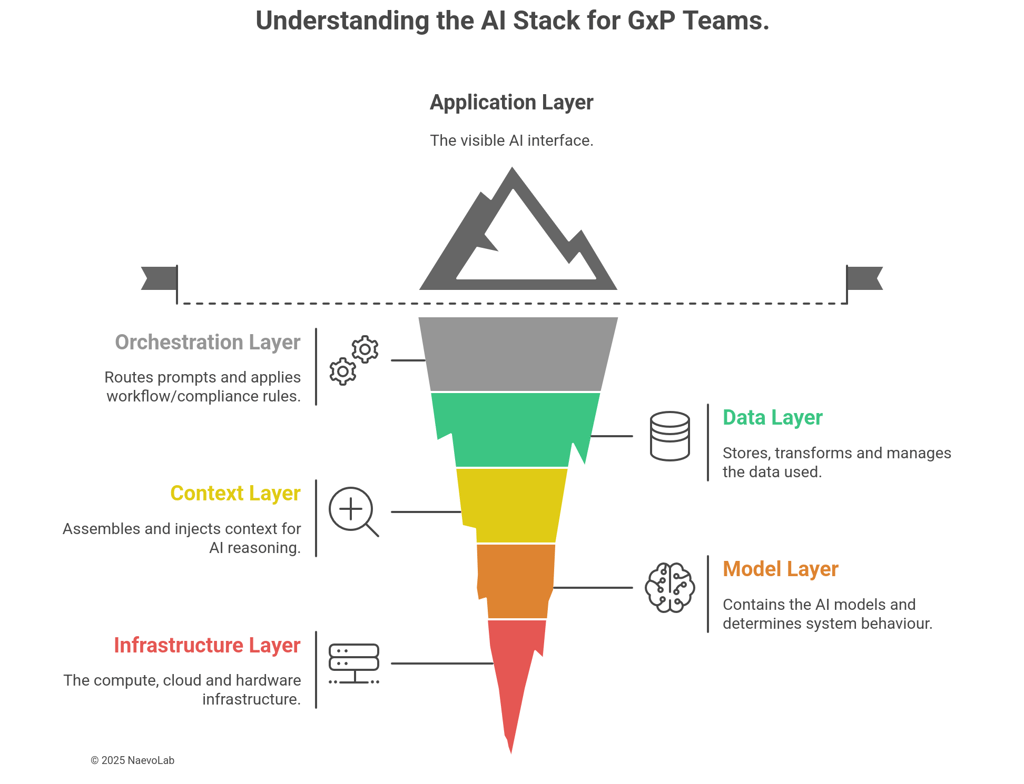

The AI Stack: A Six-Layer Model

For this article, we use a six-layer model of the AI stack:

Application Layer

Orchestration Layer

Data Layer

Context Layer

Model Layer

Infrastructure Layer

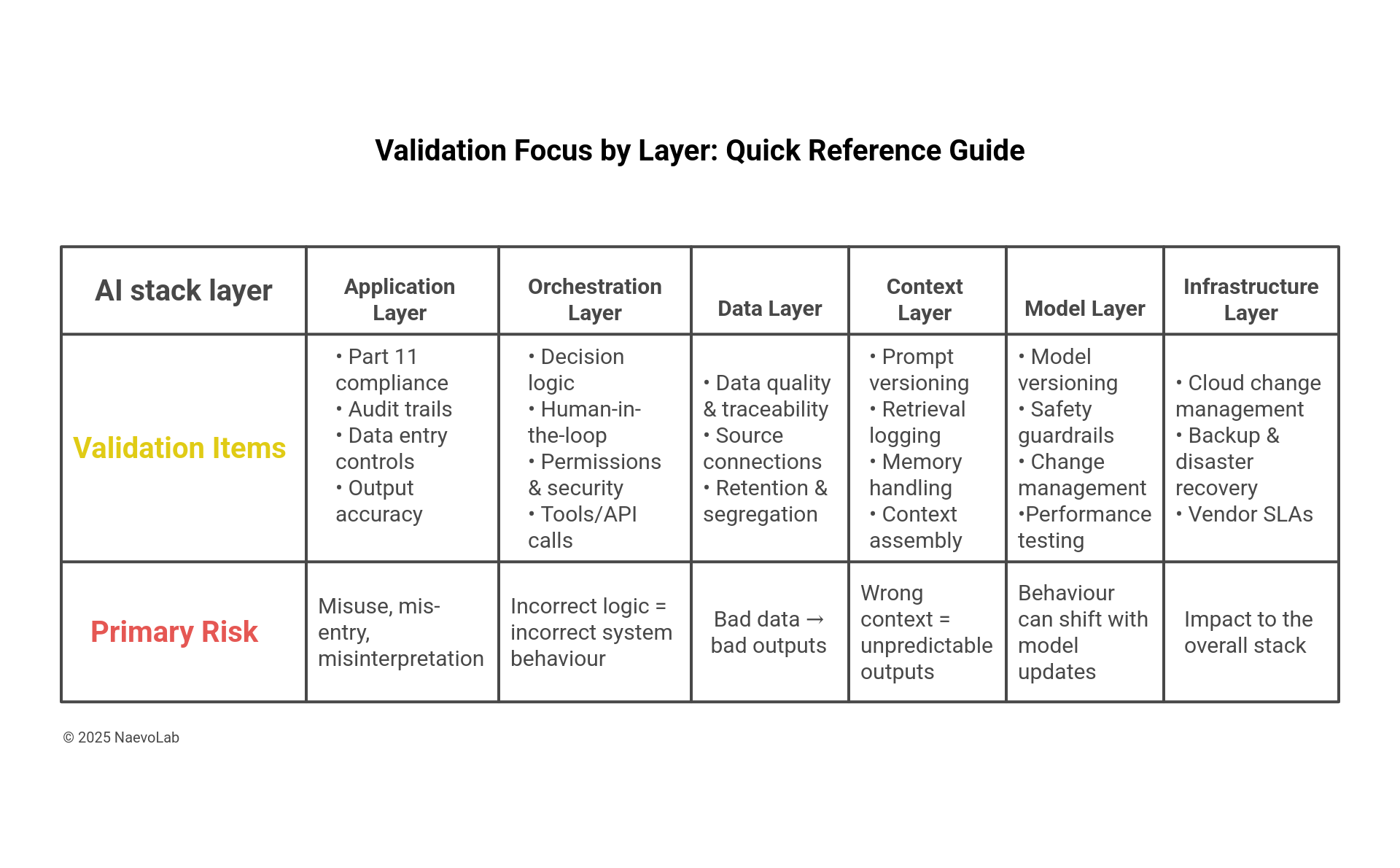

Below is a quick reference showing key validation items and primary risks for each layer:

The exact approach for each layer will vary based on GAMP software category, intended use, risk level, whether the solution is custom-built or vendor-provided, and which components are configurable.

While real-world implementations may include additional components or feedback loops, the layers themselves remain consistent—providing a foundation for understanding validation boundaries and making informed, risk-based decisions.
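For teams that want the layers in a form they can query or reuse in a risk assessment, the model can be recorded as a simple lookup. This is a minimal sketch only: the names `AI_STACK`, `focus_for`, and `layers_covering` are assumptions made for illustration, and the focus items are condensed from the layer descriptions in this article, not taken from any standard.

```python
# Illustrative lookup of the six layers described in this article.
# The focus items are condensed from the article's own layer descriptions.
AI_STACK = {
    "Application": ["intended use", "data entry controls", "output presentation",
                    "error handling", "audit trails"],
    "Orchestration": ["decision logic", "context injection", "security checks",
                      "permissions", "error handling", "data flow to the model"],
    "Data": ["data quality", "integrity", "traceability", "access controls"],
    "Context": ["context traceability", "retrieval logging", "prompt versioning",
                "guardrails", "memory/state handling", "input/output checks"],
    "Model": ["model selection", "configuration", "versioning", "safety behaviour",
              "performance testing", "change management"],
    "Infrastructure": ["availability", "reliability", "security controls",
                       "backup and recovery", "change management", "supplier assurance"],
}


def focus_for(layer: str) -> list[str]:
    """Return the validation focus areas recorded for one layer (hypothetical helper)."""
    return AI_STACK[layer]


def layers_covering(topic: str) -> list[str]:
    """Return every layer that lists the given focus area, in stack order."""
    return [name for name, items in AI_STACK.items() if topic in items]
```

A lookup like this makes overlaps visible: `layers_covering("change management")` returns both the Model and Infrastructure layers, which is a prompt to agree who owns change control for each.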

1 — Application Layer (UI / User Interface)

This layer is the most visible, as it is where AI outputs are displayed and user inputs are captured. It includes dashboards, forms, chat interfaces, and any UI elements that shape how users interact with the system.

From a validation perspective, this layer focuses on intended use, data entry controls, output presentation, error handling, and audit trails. Where electronic records or signatures are in scope, 21 CFR Part 11 controls must be evident here. While this layer doesn’t determine how the AI works, it defines the user experience and is often where potential compliance issues surface first.
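To make the audit trail point concrete, the sketch below records who asked what, what the interface displayed, and when. It is an illustration under stated assumptions, not a Part 11 compliant implementation: the `AuditEntry` fields, the `record_interaction` helper, and the in-memory list standing in for a protected log store are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: an entry cannot be modified after creation
class AuditEntry:
    user_id: str
    action: str
    user_input: str
    displayed_output: str
    timestamp_utc: str


def record_interaction(log: list, user_id: str, user_input: str,
                       displayed_output: str) -> AuditEntry:
    """Append one serialised entry to the log and return it.

    `log` is a plain list here; a real system would write to a
    protected, append-only store (assumption for illustration).
    """
    entry = AuditEntry(
        user_id=user_id,
        action="ai_response_displayed",
        user_input=user_input,
        displayed_output=displayed_output,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
    )
    log.append(json.dumps(asdict(entry), sort_keys=True))
    return entry
```

Capturing the output exactly as displayed matters because the user acts on what the screen shows, which may differ from the raw model response after formatting or truncation.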

2 — Orchestration Layer (Routing, MCP, Agents)

The Orchestration Layer manages how system components coordinate. It sits between the UI and the model and controls overall system behaviour, including prompt routing, workflow logic, guardrails, business rules, and tool or API calls, for example through Model Context Protocol (MCP) servers used for tool integration and automation.

From a validation perspective, this layer defines decision logic, context injection, security checks, permissions, error handling, and data flow to the model. Changes here directly affect reliability, traceability, and the overall risk profile.
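A permission check in front of every tool call is one small example of the decision logic this layer holds. The sketch below is an assumption-laden illustration: the roles, the tool names, `ALLOWED_TOOLS`, and `route_tool_call` are invented for this example and do not correspond to any particular orchestration framework or MCP implementation.

```python
# Hypothetical role-to-tool permissions; a real system would load these
# from controlled, versioned configuration.
ALLOWED_TOOLS = {
    "qa_reviewer": {"search_sops", "summarise_deviation"},
    "viewer": {"search_sops"},
}


class ToolCallDenied(Exception):
    """Raised when a role requests a tool it is not permitted to use."""


def route_tool_call(role: str, tool: str, decisions: list) -> str:
    """Log the routing decision, then allow or block the tool call."""
    allowed = tool in ALLOWED_TOOLS.get(role, set())
    # Every decision is logged, including denials, to support traceability.
    decisions.append({"role": role, "tool": tool, "allowed": allowed})
    if not allowed:
        raise ToolCallDenied(f"{role!r} may not call {tool!r}")
    return tool
```

Because the rule lives in configuration, changing `ALLOWED_TOOLS` changes system behaviour without touching the model, which is why changes at this layer need their own change control.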

3 — Data Layer

This layer covers data lakes, warehouses, and unified storage systems, including reference data, metadata, structured and unstructured sources, vector databases, and connections to systems like MES, LIMS, and SAP.

It also manages how data is prepared, transformed, stored, and retrieved.

From a validation perspective, this layer focuses on data quality, integrity, traceability, and access controls. Changes to data sources or retrieval logic can directly influence model behaviour and must be risk-assessed and monitored.
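One simple way to notice that a source has changed is to compare content fingerprints against an approved baseline. The sketch below uses SHA-256 from Python's standard library; the function names and the idea of keying the baseline by document name are assumptions for illustration, not a prescribed method.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 hex digest of a document's raw bytes."""
    return hashlib.sha256(content).hexdigest()


def changed_sources(baseline: dict, current: dict) -> list:
    """List sources that were added, removed, or altered since the baseline.

    baseline: source name -> approved fingerprint (hex string)
    current:  source name -> current raw content (bytes)
    """
    names = sorted(set(baseline) | set(current))
    changed = []
    for name in names:
        now = fingerprint(current[name]) if name in current else None
        if baseline.get(name) != now:
            changed.append(name)
    return changed
```

Any name returned by `changed_sources` is a candidate for risk assessment before the updated content is allowed to influence retrieval.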

4 — Context Layer

The Context Layer decides what the model actually sees before it reasons. It assembles the right information—RAG results, document chunks, prompt templates, tool outputs, and guardrails—and injects them into the model at the right moment.

For example, when a user asks about a validation SOP, this layer retrieves the relevant sections and formats them before the model responds.

From a validation perspective, this layer manages context traceability, retrieval logging, prompt versioning, guardrails, memory/state handling, and input/output checks.

It directly influences the accuracy, reliability, and auditability of AI behaviour.
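The SOP example above can be sketched as a function that assembles the prompt and, alongside it, a record of exactly which sources and which template version were used. This is a minimal illustration: retrieval itself is assumed to have already happened, and `Chunk`, `build_context`, the template text, and the version label `sop-qa-v1` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical versioned prompt template; the version label is logged with every use.
PROMPT_TEMPLATE_VERSION = "sop-qa-v1"
PROMPT_TEMPLATE = (
    "Answer using only the excerpts below.\n\n{excerpts}\n\nQuestion: {question}"
)


@dataclass
class Chunk:
    doc_id: str
    section: str
    text: str


def build_context(question: str, chunks: list) -> tuple:
    """Return (prompt, retrieval_log) for already-retrieved chunks."""
    excerpts = "\n".join(
        f"[{c.doc_id} section {c.section}] {c.text}" for c in chunks
    )
    prompt = PROMPT_TEMPLATE.format(excerpts=excerpts, question=question)
    # The log captures what the model was shown, so an answer can be traced
    # back to specific document sections and a specific template version.
    retrieval_log = {
        "template_version": PROMPT_TEMPLATE_VERSION,
        "question": question,
        "sources": [(c.doc_id, c.section) for c in chunks],
    }
    return prompt, retrieval_log
```

Keeping the log separate from the prompt means reviewers can audit what was retrieved without having to parse prompt text.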

5 — Model Layer

The Model Layer covers the AI model itself—whether a frontier LLM, a fine-tuned variant, an open-source model, or a vendor-provided model.

From a validation perspective, this layer focuses on model selection, configuration, versioning, safety behaviour, performance testing, and change management. Model behaviour and update patterns must be understood and documented, typically through system or model cards.

For closed-source models, validation relies mainly on vendor transparency and API controls. For open-source or fine-tuned models, direct testing and performance qualification are essential.
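For vendor-hosted models in particular, a basic control is to compare the model configuration in use against the approved one. The sketch below assumes the deployed settings can be obtained as a dictionary; the model name, version string, and `check_model_config` helper are placeholders invented for illustration, not real vendor values or APIs.

```python
# Hypothetical approved configuration, as it might appear in a validation record.
APPROVED_MODEL = {
    "name": "example-llm",
    "version": "2025-01-15",
    "temperature": 0.0,
}


def check_model_config(reported: dict) -> list:
    """Return a list of deviations between approved and reported settings."""
    return [
        f"{key}: approved {expected!r}, reported {reported.get(key)!r}"
        for key, expected in APPROVED_MODEL.items()
        if reported.get(key) != expected
    ]
```

An empty list means the deployed configuration matches what was approved; any entry is a trigger for change management rather than a silent update.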

6 — Infrastructure Layer

The Infrastructure Layer covers the compute, storage, networking, and cloud services (Azure, AWS, GCP) that support the AI system.

From a validation perspective, this layer focuses on availability, reliability, security controls, backup and recovery, change management, and supplier assurance. Cloud updates or misconfigurations can impact the entire AI stack.

This layer also covers IaaS responsibilities and SaaS dependencies, as well as on-prem servers, local GPU hosts, and edge deployments, which introduce additional security and lifecycle considerations.

Issues here affect the entire stack.

Conclusion

The AI stack gives GxP teams a structured framework to understand, evaluate, and validate modern AI systems. By defining clear layer boundaries, teams can move from vague “AI validation” concepts to focused, risk-based approaches aligned with the FDA's Computer Software Assurance (CSA) guidance, GAMP 5, and the NIST AI Risk Management Framework (AI RMF).

Each layer can be assessed and improved independently—enabling iterative enhancement rather than full system overhauls. This shared structure improves communication across QA, CSV, IT, and business teams.

As AI adoption accelerates, understanding the stack becomes foundational for evaluating vendor solutions, asking better questions, and building compliant systems that scale beyond traditional IQ/OQ/PQ.

NaevoLab explores validation and emerging technologies in life sciences through independent, practical insights.

More resources are available at naevolab.com