EU New Annex 22 Draft on AI: Key Takeaways

Covers the EU Annex 22 AI draft for validating static and generative models in GxP.

David Iroaganachi

9/4/2025 · 3 min read

Introduction

In my previous article, I explored the EU AI Act, a broad regulation that sets the governance framework for AI across industries within the EU. In this article, we will focus on the recent draft of EU Annex 22, which is aimed specifically at GxP environments.

The draft focuses on AI/ML systems — particularly static models — and provides guidance on how they should be validated and managed within controlled GxP settings.

Annex 22 – Scope and Focus

Annex 22 builds on Annex 11 by introducing AI-specific guidance for regulated environments. As outlined in the draft, the focus is primarily on static machine learning models: models trained on a fixed dataset whose parameters are then locked. These models do not continue learning during use. The draft also expects them to be deterministic, meaning the same input consistently produces the same output.
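As a minimal sketch of what "locked" and "deterministic" can mean in practice, the Python below fingerprints a model's parameters at release and checks that repeated calls on the same input agree. The linear model, its weights, and the function names are hypothetical; the draft does not prescribe these particular checks.

```python
from __future__ import annotations

import hashlib
import json
from typing import Callable, Sequence


def fingerprint_weights(weights: Sequence[float]) -> str:
    """SHA-256 fingerprint of the model parameters, recorded when the model is locked."""
    payload = json.dumps(list(weights)).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def is_deterministic(
    predict: Callable[[Sequence[float]], float],
    inputs: list[Sequence[float]],
    repeats: int = 5,
) -> bool:
    """True if every input gives an identical output on repeated calls."""
    for x in inputs:
        first = predict(x)
        if any(predict(x) != first for _ in range(repeats - 1)):
            return False
    return True


# Hypothetical static model: a linear scorer whose weights were frozen after training.
LOCKED_WEIGHTS = (0.8, -0.3, 0.1)
RELEASED_FINGERPRINT = fingerprint_weights(LOCKED_WEIGHTS)  # stored under change control


def locked_model(x: Sequence[float]) -> float:
    """Score one input using only the frozen weights (no learning during use)."""
    return sum(w * xi for w, xi in zip(LOCKED_WEIGHTS, x))
```

Re-computing `fingerprint_weights` after each deployment and comparing it with `RELEASED_FINGERPRINT` gives a simple signal that the parameters have not changed outside change control.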

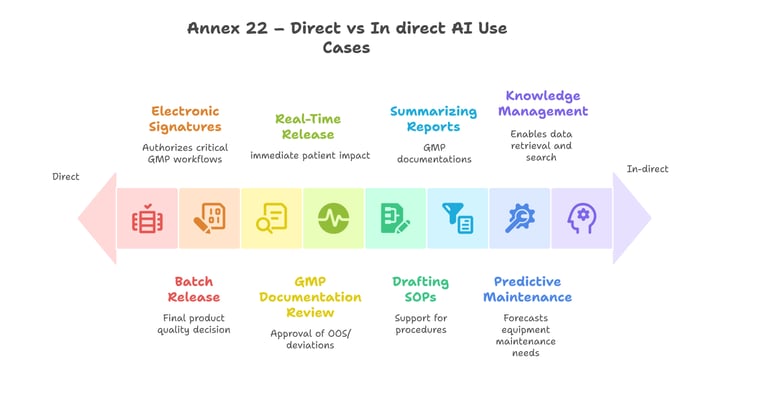

According to the draft, these static models can be used in critical GMP processes that have a direct impact on patient safety and product quality. In contrast, generative AI — such as large language models (LLMs), which are considered non-deterministic — may be suitable only for non-critical processes that have an indirect impact on safety and quality.

This distinction mirrors the EU AI Act’s high-risk classification approach: both frameworks assess AI systems based on their intended use and level of risk.

The diagram below provides a simplified illustration of how these categories might be interpreted in a GMP context:

Simplified illustration of Annex 22's distinction between direct (critical) and indirect (non-critical) AI use cases

✅ Rule of thumb:

"If the AI’s output directly changes a GMP decision (release, reject, pass, fail, approve), it’s critical (direct impact).

If the AI’s output only supports and still requires human review and approval, it’s non-critical (indirect impact)."
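The rule of thumb can be expressed as a small decision function. This is a sketch under stated assumptions: the set of decisions mirrors the quote above and is not exhaustive, and the names `Impact` and `classify_use_case` are hypothetical. A real classification still belongs in a documented risk assessment.

```python
from __future__ import annotations

from enum import Enum
from typing import Optional


class Impact(Enum):
    CRITICAL = "critical (direct impact)"
    NON_CRITICAL = "non-critical (indirect impact)"


# GMP decisions named in the rule of thumb (illustrative, not exhaustive).
GMP_DECISIONS = {"release", "reject", "pass", "fail", "approve"}


def classify_use_case(gmp_decision: Optional[str], human_approval_required: bool) -> Impact:
    """Apply the rule of thumb to one AI use case.

    gmp_decision: the GMP decision the AI output sets, or None if it only informs.
    human_approval_required: whether a person must review and approve before it takes effect.
    """
    if gmp_decision in GMP_DECISIONS and not human_approval_required:
        return Impact.CRITICAL  # the output changes the decision directly
    return Impact.NON_CRITICAL  # the output supports a human-approved decision
```

For example, a visual inspection model that rejects units on its own maps to `classify_use_case("reject", False)`, which returns `Impact.CRITICAL`.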

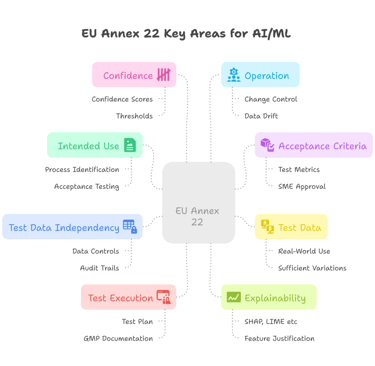

The Key Areas of EU Annex 22

Beyond distinguishing between direct and indirect use, Annex 22 also outlines several key areas for how AI/ML models should be validated and managed within GMP environments.

These key areas reflect a shift toward structured, lifecycle-based oversight of AI/ML systems, moving away from black-box tools toward traceable, explainable, and risk-aware implementations:

Intended Use – Models must have a clearly defined purpose, supported by documentation and acceptance testing — much like a validation plan.

Acceptance Criteria – Models need clear test metrics and acceptance criteria approved by subject-matter experts (SMEs) before testing. Performance must be at least as good as that of the process being replaced, with no drop in productivity or quality.
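A minimal sketch of the acceptance check, assuming a binary defect classifier (defective = positive) evaluated with metrics such as sensitivity and specificity. The baseline values and all names are hypothetical; in practice the criteria would be approved by SMEs before testing starts and would reflect the measured performance of the process being replaced.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # defective units correctly rejected
    fp: int  # good units wrongly rejected
    tn: int  # good units correctly passed
    fn: int  # defective units wrongly passed

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)


def meets_acceptance(result: ConfusionMatrix, baseline: dict[str, float]) -> dict[str, bool]:
    """Compare each metric with the pre-approved baseline of the process being replaced."""
    observed = {"sensitivity": result.sensitivity(), "specificity": result.specificity()}
    return {name: observed[name] >= floor for name, floor in baseline.items()}


# Hypothetical baseline for the manual inspection being replaced, fixed before testing.
BASELINE = {"sensitivity": 0.95, "specificity": 0.90}
```

Returning a pass/fail per metric, rather than a single boolean, keeps the test record explicit about which criterion failed.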

Test Data – Data should reflect real-world use, cover variations, and be large enough for reliable results. Preprocessing must be justified; generative AI cannot be used to create test data without strong justification.
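One simple way to evidence that test data covers the expected variations is to count samples per subgroup against a minimum fixed in the test plan. The subgroup names and the minimum of 30 are illustrative assumptions; the real minimum should come from a statistical justification, not from this code.

```python
from __future__ import annotations

from collections import Counter


def coverage_gaps(subgroup_labels: list[str], required: set[str], min_per_group: int) -> dict[str, int]:
    """Return each required subgroup with fewer than min_per_group test samples, with its count."""
    counts = Counter(subgroup_labels)  # missing subgroups count as 0
    return {g: counts[g] for g in sorted(required) if counts[g] < min_per_group}


# Hypothetical subgroups that the intended use says the model must handle.
REQUIRED = {"good", "scratch", "crack", "discoloration"}
```

An empty result means every required subgroup meets the minimum; anything else lists the shortfall to resolve before test execution.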

Test Data Independence – Training and test data must remain separate, with strict controls and audit trails. Those who train a model should not also test it (the “four-eyes principle”).
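The two independence controls can be sketched as an automated pre-test check: a content hash flags records that appear in both datasets, and a name comparison flags a breach of the four-eyes principle. The function names and the record format are hypothetical. Exact hashing only catches identical records; near-duplicates (for example, two images of the same unit) need additional controls.

```python
from __future__ import annotations

import hashlib


def record_id(record: bytes) -> str:
    """Content hash used to spot the same record in both datasets."""
    return hashlib.sha256(record).hexdigest()


def independence_findings(train: list[bytes], test: list[bytes], trainer: str, tester: str) -> list[str]:
    """Return audit findings; an empty list means both checks passed."""
    findings: list[str] = []
    overlap = {record_id(r) for r in train} & {record_id(r) for r in test}
    if overlap:
        findings.append(f"{len(overlap)} test record(s) also appear in the training data")
    if trainer == tester:
        findings.append(f"{trainer} both trained and tested the model (four-eyes principle)")
    return findings
```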

Test Execution – Models must be shown fit for intended use, with agreed test plans, metrics, and documentation. All deviations must be recorded.

Explainability – Tools such as SHAP, LIME, or heat maps should make the model’s reasoning visible, avoiding the “black box” problem.
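SHAP and LIME are separate libraries with their own APIs. Rather than guess at those, this sketch uses a case with a known closed form: for a linear model with independent features, the SHAP value of feature i is w_i (x_i − E[x_i]), and the values sum to the prediction minus the prediction at the feature means. The model, feature names, and weights are hypothetical.

```python
from __future__ import annotations


def linear_attributions(weights: list[float], x: list[float], feature_means: list[float]) -> list[float]:
    """Per-feature contribution w_i * (x_i - mean_i) for a linear model.

    With independent features these equal the SHAP values and sum to f(x) - f(mean).
    """
    return [w * (xi - mu) for w, xi, mu in zip(weights, x, feature_means)]


# Hypothetical model and features, for illustration only.
FEATURES = ["fill_volume", "seal_temperature", "line_speed"]
WEIGHTS = [0.8, -0.3, 0.1]
MEANS = [0.5, 1.0, 2.0]  # feature averages over the training data


def top_contributors(x: list[float], n: int = 2) -> list[tuple[str, float]]:
    """Features ranked by absolute contribution, suitable for recording with each decision."""
    contribs = zip(FEATURES, linear_attributions(WEIGHTS, x, MEANS))
    return sorted(contribs, key=lambda item: abs(item[1]), reverse=True)[:n]
```

Storing the output of `top_contributors` alongside each prediction gives reviewers a record of which features drove the decision.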

Confidence – Models must log a confidence score for each prediction and apply thresholds. Low-confidence outputs should be flagged as “undecided”, since it is safer to escalate than to risk a wrong decision.
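A sketch of the confidence rule, assuming the model already returns a label and a confidence score. Every prediction is logged with its score, and anything below the threshold is returned as “undecided” for human review. The 0.90 threshold, the logger name, and the `Decision` type are hypothetical; a GxP system would write to a validated audit trail rather than a standard Python logger.

```python
from __future__ import annotations

import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("model_audit")  # stand-in for a validated audit trail

UNDECIDED = "undecided"


@dataclass(frozen=True)
class Decision:
    label: str
    confidence: float


def decide(predicted_label: str, confidence: float, threshold: float = 0.90) -> Decision:
    """Log the confidence of every prediction and withhold low-confidence ones."""
    audit_log.info("label=%s confidence=%.3f threshold=%.2f", predicted_label, confidence, threshold)
    if confidence < threshold:
        return Decision(UNDECIDED, confidence)  # escalate to a human instead of guessing
    return Decision(predicted_label, confidence)
```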

Operation – Validated models must remain under change and configuration control, with ongoing monitoring for performance and data drift. In human-in-the-loop setups, operator reviews and rechecks are required.
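Drift monitoring needs a metric. One common choice, shown here as a swapped-in example rather than anything Annex 22 specifies, is the Population Stability Index (PSI), which compares the binned distribution of an input feature in production with the distribution seen at validation. The bin edges, the 0.25 alert level (a widely quoted rule of thumb), and the function names are assumptions.

```python
from __future__ import annotations

import math
from bisect import bisect_right


def bin_fractions(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values in each bin defined by the sorted cut points in edges."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference: list[float], production: list[float],
                               edges: list[float], eps: float = 1e-6) -> float:
    """PSI = sum((p - r) * ln(p / r)) over bins; eps guards against empty bins."""
    psi = 0.0
    for r, p in zip(bin_fractions(reference, edges), bin_fractions(production, edges)):
        r, p = max(r, eps), max(p, eps)
        psi += (p - r) * math.log(p / r)
    return psi


DRIFT_ALERT = 0.25  # common industry rule of thumb, not an Annex 22 value
```

Computed per feature on a schedule, a value above `DRIFT_ALERT` would trigger an investigation under change control rather than an automatic retrain, which keeps the model static in use.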

Key Takeaways

Deterministic scope: Annex 22 focuses on static models that produce predictable outputs. Generative AI and LLMs are excluded from critical GMP use but may still support non-critical tasks.

Performance bar: Where AI/ML replaces an existing process, performance must be at least equal — ideally better — in both quality and productivity.

Clearer risk lines: The distinction between direct (critical) and indirect (non-critical) AI uses gives teams a practical basis for risk assessments.

Documentation: Annex 22 reinforces that validation evidence, test records, and decision rationale must be fully traceable.

Annex 22 builds on Annex 11 with specific guidance for AI/ML in regulated systems. It aligns with the EU AI Act’s risk-based approach: static, validated models may be used if properly risk-managed. Generative AI remains excluded from critical GMP decisions. This provides clear direction for applying AI within a risk-based framework.

🔍 Consideration: While Annex 22 excludes generative AI and LLMs from critical systems, these models can also be trained and locked in a static form. The challenge lies in their outputs, which may still vary between runs (for example, when sampling settings such as temperature introduce randomness), making them difficult to align with the deterministic view of “static” outlined in the draft.

High-level overview of the key areas outlined in the Annex 22 draft