The EU AI Act: Key Points for GxP Teams

A quick breakdown of what the EU AI Act means for GxP teams.

David Iroaganachi

7/12/2025 · 3 min read

With AI governance gaining momentum in regulated industries, this article takes a quick look at the EU AI Act to provide a sense of the road ahead.

A Quick Look at the EU AI Act:

As the EU AI Act is the first legislation of its kind, I chose to begin here because it sets the foundation for how AI systems will be classified and governed. While GxP isn't directly mentioned, the Act introduces a risk-based classification system — from minimal to high risk. Regulated systems including GxP systems are likely to fall under the high-risk category due to their potential impact on health and safety, particularly as referenced in Annex III.

This article focuses on Chapter III (High-Risk AI Systems), which I believe offers the most practical relevance for teams in regulated environments.

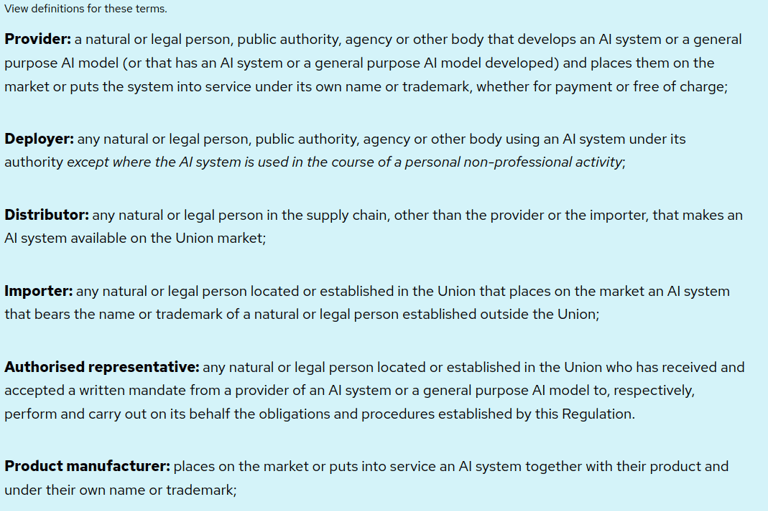

In addition to classifying AI systems by risk, the EU AI Act also defines roles for the entities involved in their use. In GxP environments, organizations would typically be classified as “Deployers”, especially when AI is used in validated processes such as automation, analytics, or decision support.

"Deployer: Any natural or legal person, public authority, agency, or other body using an AI system under its authority, except where the AI system is used in the course of a personal non-professional activity. "

Key Highlights from the EU AI Act:

- Risk-Based Classification: AI systems are categorized into unacceptable, high-risk, limited-risk, and minimal-risk levels. Most GxP use cases (e.g., manufacturing, quality control, clinical systems) are likely to fall under the high-risk category.

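- Annex III – High-Risk AI Use Cases: While not GxP-specific, Annex III lists stand-alone high-risk use cases such as critical infrastructure and access to essential services, including healthcare. Medical devices are brought in separately, through Article 6(1) and the product legislation listed in Annex I, so AI tools used in regulated manufacturing and clinical workflows may fall within scope by either route.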

- Article 9 – Risk Management System: Requires a documented, lifecycle risk management process for high-risk AI systems—closely aligned with GxP quality risk management principles.

- Article 10 – Data and Data Governance: Mandates high-quality, well-governed datasets to reduce bias and ensure accuracy, similar to ALCOA+ expectations for data integrity.

- Article 13 – Transparency and Provision of Information to Deployers: Requires clear instructions for use so that deployers can interpret and use the system's outputs correctly. It ties into Article 12 – Record-Keeping, which requires automatic event logging, and together they reinforce traceability and audit readiness.

- Article 14 – Human Oversight: Requires high-risk AI systems to be designed so that people can effectively monitor them and intervene while they are in use.

- Article 15 – Accuracy, Robustness, and Cybersecurity: Requires systems to be accurate, resilient, and secure, with safeguards against cyber threats and against biased feedback loops in systems that keep learning after deployment.

- Article 26 – Obligations of Deployers: Deployers must use the system according to its instructions for use, monitor its operation, retain logs, and report serious incidents, all of which emphasise traceability and risk management.
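To make the record-keeping and oversight points above a little more concrete, here is a minimal sketch of what a deployer-side audit record and retention check might look like. Everything in it is an assumption for illustration: the `AiDecisionRecord` fields, the `may_purge` helper, and the 183-day floor (my reading of Article 26 is that logs are kept for at least six months unless other law says otherwise, and GxP retention rules will usually demand far longer). It is not a compliance implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumption: "at least six months" approximated as 183 days. The real period
# should come from your own legal and quality assessment.
MIN_RETENTION = timedelta(days=183)


@dataclass(frozen=True)
class AiDecisionRecord:
    """Hypothetical audit record for one AI-assisted decision."""
    system_id: str
    model_version: str
    input_ref: str                      # pointer to the input data, not the data itself
    output_summary: str
    created_at: datetime
    reviewed_by: Optional[str] = None   # human reviewer, if any

    def has_human_review(self) -> bool:
        """True when a named person has reviewed the AI output."""
        return self.reviewed_by is not None


def may_purge(record: AiDecisionRecord, now: datetime,
              retention: timedelta = MIN_RETENTION) -> bool:
    """Return True only once the record is at least `retention` old."""
    if retention < MIN_RETENTION:
        raise ValueError("retention is shorter than the assumed minimum")
    return now - record.created_at >= retention


if __name__ == "__main__":
    record = AiDecisionRecord(
        system_id="qc-vision-01",       # illustrative identifiers only
        model_version="1.4.2",
        input_ref="batch/123/image/7",
        output_summary="reject",
        created_at=datetime(2025, 1, 1, tzinfo=timezone.utc),
    )
    print(record.has_human_review())
    print(may_purge(record, datetime(2025, 3, 1, tzinfo=timezone.utc)))
```

Keeping the model version and a reference to the input on every record is a design choice borrowed from familiar audit-trail practice: it lets a reviewer reconstruct which system produced which output without storing the raw data twice.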

Key Takeaways

GxP Not Explicitly Mentioned: The EU AI Act does not directly reference GxP, but its broad scope means many GxP-related systems may still fall under the high-risk category — especially those impacting patient safety, product quality, or data-driven decisions.

The Act emphasizes traceability, risk management, and data integrity, all foundational principles for regulated environments.

Human-in-the-loop (HITL) oversight could become a foundational requirement across all future regulations for high-risk AI systems.

Entity Roles May Overlap: Organizations might simultaneously fall into more than one category under the EU AI Act, such as a pharma manufacturer being considered both a Provider and a Deployer.

Looking Ahead: The Act lays the groundwork for future AI compliance, particularly in life sciences and other regulated sectors.

Implementation Timeline: The rollout spans from 2024 to 2031, with key milestones along the way.

🔍 In practical terms, teams may focus on areas like data governance, lifecycle documentation, bias and explainability, risk-based model validation, and post-deployment monitoring. These pillars mirror the principles of the EU AI Act and align with familiar GxP expectations under Computer Software Assurance (CSA) and data integrity.
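As one illustration of post-deployment monitoring, the sketch below compares a model's recent outputs against human-confirmed results and flags when accuracy drops below the level established at validation. The function name `check_accuracy`, the 5-point tolerance, and the idea of using confirmed QC outcomes as ground truth are all assumptions of mine, not requirements taken from the Act.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MonitoringResult:
    accuracy: float
    within_tolerance: bool


def check_accuracy(predictions: Sequence[str], confirmed: Sequence[str],
                   validated_accuracy: float,
                   tolerance: float = 0.05) -> MonitoringResult:
    """Compare AI outputs with human-confirmed outcomes for the same items.

    `validated_accuracy` is the accuracy recorded at validation; `tolerance`
    is a hypothetical allowed drop before the result is flagged.
    """
    if not predictions or len(predictions) != len(confirmed):
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == c for p, c in zip(predictions, confirmed))
    accuracy = correct / len(predictions)
    return MonitoringResult(
        accuracy=accuracy,
        within_tolerance=accuracy >= validated_accuracy - tolerance,
    )


if __name__ == "__main__":
    result = check_accuracy(
        predictions=["pass", "fail", "pass", "pass"],
        confirmed=["pass", "fail", "fail", "pass"],
        validated_accuracy=0.95,
    )
    if not result.within_tolerance:
        # In practice this might open a deviation or trigger revalidation.
        print(f"accuracy {result.accuracy:.2f} is below the validated baseline")
```

A fixed threshold like this is the simplest possible rule. A real programme would likely define the metric, sample size, and escalation path in its validation plan.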

🗓️ Chapter III of the EU AI Act, which covers High-Risk AI Systems, is due to come into effect on 2 August 2026.

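Source: EU Artificial Intelligence Act – https://artificialintelligenceact.eu/ (an unofficial resource maintained by the Future of Life Institute, not the European Commission)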